On "Closure: The Definitive Guide" by Michael Bolin

“Closure: The Definitive Guide” by Michael Bolin does a nice job of explaining a battle-tested, very complex tool that helps keep very complex JavaScript codebases manageable and understandable. Appendix B alone is worth the price of the book, as it explains many of JavaScript's quirks to newbies and made me realize I was not as good as I thought I was. The world would be a better place if all JavaScript programmers read the appendix.

The rest of the book is what I expected: it teaches the reader how to build an application using Closure to your advantage, making your code more future-proof, browser-proof, more expressive, verifiable and testable. It covers the Closure Library, the Closure Compiler (a tool that compiles your JavaScript code into very compact JavaScript), Closure Templates (which compile to both JavaScript and Java that runs on your server, and maybe your mobile too), widgets, AJAX, automated building and debugging. While we learn all that, the author also teaches us about the process of building a Google-sized web application. If you are at all familiar with JavaScript, the idea of building a huge application in it is terrifying. After reading the book it is still scary, but at least it feels possible for mere mortals.

If you are feeling the pressure of maintaining a big JavaScript project and are considering selling management on the idea of migrating it to a JavaScript framework that encourages good practices (JavaScript makes it very easy to shoot yourself in the foot), this may be the book for you.

You can grab it directly from the O'Reilly web site. The e-book version is DRM-free and comes in Kindle, ePub, PDF and Android formats.

Wikileaks and its followers (1 every 8 seconds)

After playing a little with the Twitter API, I built a small tool that helps me keep an eye on some indicators. Today, I turned its sights on the @wikileaks account on Twitter and got this:

I may be wrong (it's late and I am tired and I don't trust my math at those times), but it seems like @wikileaks is gaining about 1 follower every 8 seconds or so.

To give you an idea of what these numbers mean (and, since they were measured on a Saturday night in most of the Western world, I would consider them very conservative): at this rate, they will cross the million-follower threshold in early February. I will measure the growth rate again during the week, and over a longer period, to get better numbers and perhaps a nice curve. I also expect the media circus around Assange to grow as he fights extradition and as Wikileaks releases more leaked documents. My gut feeling tells me they will cross the million-follower mark in early to mid-January.
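For the curious, here is the back-of-the-envelope arithmetic behind that projection. It assumes the rate stays constant. N_0 stands for the follower count at the moment of measurement, which is not restated in the text.

$$
\begin{aligned}
r &= \frac{86\,400\ \text{s/day}}{8\ \text{s/follower}} = 10\,800\ \text{followers/day} \approx 75\,600\ \text{followers/week} \\
t_{10^6} &= \frac{10^6 - N_0}{r} = \frac{10^6 - N_0}{10\,800}\ \text{days}
\end{aligned}
$$

At that pace, every additional 100,000 followers takes a little over nine days (100,000 / 10,800 ≈ 9.3). Even starting from zero, a full million would take about 93 days.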

For something so many people want to silence, that's quite impressive.

Wallpaper for my Nook

So, once again I find myself playing with Inkscape rather than doing useful work (for rather narrow definitions of "useful" and "work", that is). This time, I decided to create some wallpaper for my Nook.

Here it is:

Please enjoy it. It's rather easy to turn it into a screensaver (I did this for my Nook) or to make it useful on a Kindle or Sony eReader. Even iPad users may find it interesting, although they may find the lack of halftones and the 2D look somewhat primitive by their standards.

I also hope whoever holds the rights to the logo (it's from the movie) doesn't mind me using it. If you do mind, please leave a comment and I will remove this image from the site as soon as I read it.

Hold different

This time, inspired by eddieplan9, here is one for all iPhone 4 users who have experienced reception problems due to the way they hold their phones.

They had it all

I like to remind my younger peers that technology does not follow a steady pattern of progress. In the past, we can find long-forgotten examples of ideas that were not only ahead of their time, but are still ahead of our own. PLATO was one of them.

In the 70s, they had social media, collaborative editing, wide-area multi-user games, hypertext, instant messaging and e-mail. And they had it on graphics terminals that showed pictures we would not see on a desktop computer until the mid-80s.

They had it all.

Enjoy.

Linkbait and exaggeration

Every day I see lame articles I feel no need to respond to. Yet there are days I come across stuff that really demands an answer, if for no other reason than to give some enlightenment to its readers and to the people who pointed me to the article.

Today's example is a piece on ZDNet, "They exist now only in the minds of fanbois", that tries to examine a couple of things some consider to be myths while, at the same time, taking jabs at and provoking fanboys of all types. Unfortunately, it falls short due to a certain degree of exaggeration and more than a little eagerness to generate traffic. Let's see how.

"There’s a war between Microsoft and Apple"

He got this one right. Apple and Microsoft are companies, not countries. They compete in several areas, collaborate in others and will do whatever it takes to turn a profit and to protect their brand equity. As much as I regard collaborating with the backstabbing folks at Microsoft as foolish, Apple is big enough to survive. And it has some previous blunders to learn from.

"Year of the Linux desktop is coming"

I think this one is pretty irrelevant. The age of the desktop is quickly fading. At home, the last desktop PC we had was donated to charity. I will not give up the mobility my netbook affords me, just as my wife won't give up her MacBook. If you define "Linux desktop" more broadly, there will come a time when people use more and more mobile devices. As the traditional desktop computer fades away, smaller devices will replace it, piecing together the data they show on their GUIs from remote servers. Interestingly, nobody cares much, nor will anybody care, what OS runs such devices. Right now the hype points towards the iPad, which runs a flavor of Unix. Android devices run a flavor of Linux which is, kind of, another flavor of Unix. Since the whole question doesn't make sense, I will give him the point: Linux will not dominate the desktop. Not that anyone will care.

One note here: that excludes my collection of interesting computers. There are many desktops in it and I will not part with them anytime soon.

"Open-source = secure code"

Of course not. Simply opening up crappy code will not make it secure. But look closely: this guy is dealing in absolutes. Open source is no silver bullet. However, the fact that the code is easily accessible and fully disclosed ensures that security problems can be addressed as soon as they are found, and not when PR dictates they need to be solved. When the source is secret, only the manufacturer (the one with access to the source) can correct the problem. Even if it's hurting you or your company, you can't just hire a consultant to fix it.

"You are safe from malware on a Mac"

Again, absolutes. No, you are not completely safe from malware on a Mac. But the point that should be made is that you are much safer from malware under OSX than you would be on a Windows box, if only because most malware targets Windows, not OSX. The article is correct when it says current malware relies heavily on a clueless user, so you might as well get a clue. Malware is not, however, platform-neutral, and the platform to avoid for malware reasons is, definitely, Windows. I would also like to point out that I use a package-based OS, and I am much safer than Mac users (and orders of magnitude safer than Windows users) because all the software on my machine was delivered in cryptographically signed packages securely fetched from trusted servers. It's kind of an app store, but without the hype. And it happened a good couple of years before Apple "invented" the iPhone App Store.

"Users don't like walled gardens"

They shouldn't, but they do. It's a sad fact of life that most people are dumb. Most users just want to use their gadgets and really don't mind being forced to buy their stuff from a single supplier or giving up choice. Or having their copies of 1984 removed from their devices. In the end, giving up control will come back to bite them. Maybe they will learn. Who knows... I don't care.

"Apple/Microsoft/Aliens paid you to say that"

Microsoft has been known to hire PR agencies for astroturf campaigns. Examples for Microsoft, some comical, abound. Apple is a more interesting subject. Since so many of its users are so amazingly passionate, I doubt it has to pay anyone to defend the brand and its products. Fanboys of all flavors (even Microsoft has them, believe me) live in a reality distortion field, and no RDF in this industry is larger than the one emanating from Cupertino. Editorial interference happens: when Microsoft (or Oracle, or IBM) threatens to withdraw its ads, many publications listen. And let's not forget some blogs are openly not neutral and cater to supporters of a specific agenda.

Duke Nukem is coming back!!!!

Duke Nukem never went anywhere (and he has a link to prove it). If he meant Duke Nukem Forever, well, that one will, eventually, come out. This is one joke that won't die easily.

A conclusion

This is one article where I can't say he is completely wrong. However, his broad claims are evidently aimed at generating controversy by being misread. It's, therefore, bad journalism.

And, since I am claiming it's wrong, that will get me labeled a fanboy.

How to completely wipe out mankind

I just came from Hacker News, where there is a short discussion on how many nukes it would take to wipe out humanity. This is an expansion of my post there.

The original article reaches the conclusion we don't have nearly enough nukes. I disagree. It's a matter of using them efficiently.

I guess a couple hundred average nukes would be more than enough to nudge a passing rock (there are many readily available) onto a collision course with Earth. Better yet would be to use the fissiles to power a mighty big NTR (nuclear thermal rocket) attached to a comet, using the comet's own water as propellant. This would be a lot more discreet than a big blast, may provide a quicker intersection and would also add a nice oomph to the comet for when it hits the ground. I would also suggest hitting the Atlantic or Yellowstone as nice strategies for maximizing destruction. Hitting Europe could yield the maximum number of immediate deaths, but the other two may cause greater overall devastation. I bet a comet hitting a supervolcano is a very memorable event.

And nothing, apart from the supervillain's budget, would prevent the use of more than one comet. A string of fragments, like SL9, could rain death very evenly across all inhabited places on Earth.

In the end, it all depends on how long you are willing to wait until the last human is dead. If you require them all to be vaporized by the end of the afternoon, then, perhaps, we don't have nearly enough nukes. If you are a patient villain, you could use your resources far more efficiently. First, render a huge part of our infrastructure useless (with EMPs from high-altitude detonations). Then ruin food supplies (even small nukes could start fires). Only then use the remaining firepower to wipe out whoever is left after they have had a couple of years to restart civilization and concentrate a bit.

It seems very doable. In fact, we may even be able to wipe out humanity without using any nukes. I regard some politicians as much, much more destructive than simple nukes.

We build what we love (or "how to save us from an x86-only world")

We, software creators, work on what we love.

Of course, we also work on our day jobs, on products we are told to build, with the tools we are told to use. We have to.

But we also work on stuff we like with the tools we choose. We play.

And this is, often, the very best of our work.

Insufficiently advanced technology

In the past couple of years, we have seen the development of an astonishing variety of interesting microprocessors. I am not talking about the latest x86 processors, mind you, as they are not particularly exciting (that "cloud" thingie excepted, perhaps). Just about every desktop computer or laptop around us employs an x86, often to drive much more powerful (and elegant) processors that are relegated to lesser roles because... they are not x86s. And because they can't run Windows. Or Flash.

We can see a future in those interesting processors. Both POWER7 and Niagara 3 are amazing machines. We can't, however, see far into their future. These processors are being deployed on servers, often on remote datacenters we never get to visit, to run applications other servers like them already run. They are in the dark realm of legacy.

Running those apps is an important job, don't get me wrong. I like my airline tickets and my hotel reservations properly booked.

But this is the last step towards extinction. When all you run is software developed for your granddaddy, you are pretty much a doomed architecture. You may live long on life support, like zSeries mainframes (also amazingly cool boxes) and COBOL (nah... not so cool, at least not now), but even they will eventually be mothballed. Let's face it: how many new customers do they have? How many companies that run x86 servers decided to buy their first POWER or SPARC box in the last year? How many applications that run only (or, at least, better) on non-x86 boxes were developed in the last couple of years?

Extinction is a very sad event. It is sad when it's an invertebrate species (perhaps less so when it's a nasty virus), but it's also sad when it's a technology. We have seen this with moon rockets: if we are to go there again, we must, more or less, start from scratch. We just can't build a Saturn V anymore. Soon enough, we won't remember what made SPARC, MIPS, POWER or Alpha special, just as most of us have never heard of a Transputer and many of us think Windows XP Professional x64 Edition was the first 64-bit OS (clue: I played a lot with a 64-bit box in the mid-90s and I was 30 years late).

A bleak future

Nobody with enough knowledge to offer an intelligent opinion likes a PC. It's a kludge: a matryoshka of each and every IBM desktop computer, all the way down to the IBM PC 5150. Your Core i7 processor powers on thinking it's an 8086 and quite probably goes on to initialize its ISA bus, the serial ports, timers... It's nightmarish. If I were such a processor, I would jettison my heat-sink and die the hot death of inefficient power dissipation. You can probably run PC-DOS natively on them (in fact, Dell sells boxes with FreeDOS for those clever enough not to pay the Microsoft tax).

And that's where we are headed. If nothing happens, 10 years from now that's all you will be able to buy. A wicked fast kludge.

AE101 - Avoiding Extinction 101

If these notable architectures are to survive (and survive they should), we will need computers we can play with. Computers we can love and use in our everyday lives, not remote boxes in datacenters we deploy our legacy applications to. We need to run our Gnomes and Firefoxes on them. We need desktops. We need workstations based on these chips. We need to be able to run their OSs not only to host our legacy applications, but also the apps we would like to build that are unreasonable to build on a desktop PC. Free and open-source software provides an important, smooth migration path between different architectures. Some may have forgotten that, a couple of years ago, Macs ran the same OS they run now on PowerPCs. For them, seeing Gnome running on ARM-based gigantophones and smartbooks should be proof enough. It works and it works well.

And provides a safe bridge.

Unlimited power

I am writing this for two reasons. The first is that I like diversity. Diversity and selection are the tools of evolution. Unfortunately, network effects have robbed us of the first. Network effects naturally favour the majority and should be limited if we want true evolution. On desktop computers, they are felt mostly because of non-portable 3rd-party software. The same is happening with smartphones, with iPhone OS duelling Android for survival. Diversity should be reintroduced into our environment whenever it starts to falter. In order to maintain diversity, I want other chip architectures to succeed. I want POWER and SPARC, but I also want new architectures to be developed. I want neural networks and reconfigurable coprocessors. The less dependent we are on the x86 binary architecture, the easier it is to make that happen.

The second reason is far more selfish. I want one such box for me.

I want to have one such box. More important: I want the best software developers to have them (since I am not one of them, my power to effect change is very limited). I want them using those machines every day for everything they use computers for. I want them to love those machines and to play with them. I want to leverage this love and turn it into change.

Because we need them playing unrestrained by the legacy technologies that cripple today's PCs and bind them to roles that were obsolete decades ago.

We need our best people inventing the future.

I have no idea what could come out of this, just as my ancestors who walked out of the Middle East into Europe, armed with little more than sticks, had no way to imagine we would one day send people to the Moon.

Evolution needs a kick. Let's kick it.

An important note: with luck, this article will start an interesting discussion here. The site is minimalistic, but the folks who hang around there are great.

Not sad at all

Antony Satyadas, on a blog entry at developerWorks, said something that I find interesting.

It's interesting because it reflects a pervasive, and incorrect, point of view regarding past performance of Microsoft.

The entry is here and refers to two other articles, the second of which created somewhat of a stir among those interested in our craft. I won't quote much (because there isn't much to quote), but I'll comment on a few of his quotes:

"The future of America is presently in peril, not just because of the shadowy ways of the "banksters," but because of a sputtering innovation engine that's had the fuel choked off"

Gordon T. Long, former IBM and Motorola exec

I am not surprised, and I kind of agree with his point. Far too much is made elsewhere, and the knowledge to design stuff is not that important (or relevant) if you lose the capacity to build what you design. Manufacturing capacity is not something you rebuild overnight. Although it seems true the US has a serious problem at hand, that's not my point (I don't even live in the US, I just like you guys and wish you the best). My point is far less important, but, I hope, much more interesting.

"But the much more important question is why Microsoft, America’s most famous and prosperous technology company, no longer brings us the future"

Dick Brass, former Microsoft VP

I am not shocked Dick Brass thinks Microsoft used to bring "us" the future. He worked there, after all, bringing whatever passed for the future to whoever passed for customers. What surprises me is how easily one forgets the past. Microsoft's first product was a version of the BASIC programming language (something that already existed) for 8-bit microcomputers (something someone else built). Mind you, the only future they were bringing was a smaller, less capable version of something minicomputer owners already had. For a couple of years.

Microsoft's only truly paradigm-shifting early moment I can remember is Bill Gates' "open letter to hobbyists", which complained about people sharing software (some of it copyrighted and intended not for sharing, but for selling). While he was right that people should not pirate the software he wanted to sell, I like it when people share what they do. Actually, I am using the end result of such sharing right now. And, if you are reading this, so are you.

One could think of the Z-80 SoftCard (I have two of them in working condition) as innovative, because it allowed an Apple II to run CP/M software (the OS that ran most of Microsoft's offerings). It was good, but was it "innovative"? Remember: at that time, computing was much more diverse (less boring) and machines with more than one processor, if not the majority, were not uncommon.

Then one could think of MS-DOS, except for the fact that it was not created by Microsoft. And, while we are at it, it's more or less a CP/M knock-off written for 8086 processors. It was also horrible to use (but, in that regard, CP/M was not very impressive either). Even at that early age, mostly silent Apple IIs humiliated bulky and noisy PCs in terms of ease of use. Apple DOS 3.3 had long (30-character) file names, and its predecessor, DOS 3.1, already had them in 1978. PC users had to persevere until the early 90's to finally have them.

MS-DOS begat Windows, which was more or less a cheap knock-off of various concepts (the Xerox Alto family, the PERQ, the Lisa and the Macintosh) that ran on lesser computers. If a PC was "legacy" in 1984 (Windows was eventually a hit because it ran on computers people already had), the fact I am writing this on one (even if it's a multiprocessor sub-notebook running Unix) is inexcusable. This legacy held back progress in ways far too disgusting for me to properly discuss here.

"Microsoft has become a clumsy, uncompetitive innovator. Its products are lampooned, often unfairly but sometimes with good reason."

Sorry. It never was anything but clumsy and uncompetitive. Their first market breakthrough, with MITS BASIC, was achieved by bundling a copy of BASIC with every MITS computer. They made one sale: to Ed Roberts. Their next one was with MS-DOS coming bundled with IBM PCs. They achieved market dominance through deals with manufacturers, not by providing superior value to customers (although they do provide some value, mostly comedic, IMHO). Microsoft has always followed the safe path first traversed by others and then taken the easy short-cut, usually through the boardroom.

"Microsoft is no longer considered the cool or cutting-edge place to work. There has been a steady exit of its best and brightest."

I am embarrassed I did, in the late 80's and 90's, entertain such fantasies. I really thought Windows was cool. That NT was cutting edge (it was, after all, one of the first products to announce, though IIRC not to deliver, multi-processor support with multi-threading) and that Microsoft could be the right place for me. But at that very same time, I was getting enlightened. I saw the Alto (in BYTE issues, at least), Smalltalk (I bought the blue book), Lisp (it felt funny at first) and various unixes, from AIX to Solaris. How liberating for a computer guy to arrive at the simple idea that something is ready not when there is nothing more to be added, but when there is nothing more to be removed. Form and function in perfect elegance.

Because Microsoft is seen as a successful company, many people feel compelled to revise the past (to be fair, not everybody has been hanging around since the mid 70's) to make it more brilliant than it was. They were good. Not, perhaps, as good as the Bell Labs folks. Not nearly as good as Xerox. Certainly not Jobs-and-Wozniak good. But good does not translate into dominating the market and dominate the market they do. You don't have to be that good. Being clever and relentless and adequately connected will, eventually, be enough.

And so, I am not surprised Microsoft is now perceived un-cool and un-innovative. Like its products, it has become bloated and slow, as any monopolist is, and even more reluctant to innovate than it ever was, as any monopolist is expected to be. When your strategy is winning, you don't change it on a whim.

This will, eventually, bring its demise, as it brought it to IBM before (by the hands of none other than Microsoft - oh the irony).

In the meantime, I have popcorn and I am watching.

Good news - an airplane perspective

My wife and I are spending the day in Rio with her parents. She was using one of the computers in the house, a Windows Vista machine, when she read a message aloud to me: "Display Driver Stopped Responding and Has Recovered". Wow! That's good news, right?

Well... There is little point in telling a user who supposedly has no way of, or interest in (or, in some countries, the legal permission for), wrapping his or her head around what's happening inside the computer (we are talking Windows, a.k.a. NewbieOS, here) that there was a problem that, whatever it was, is no more.

Just imagine a pilot announcing, in the middle of a cross-country or, better, transatlantic, flight, that "Our starboard engine stopped responding. It experienced a flameout and when we tried to restart it, there was a fire, possibly due to an intermittent fuel leak. Our fire suppression system worked as expected and the engine has resumed normal operation. Have a nice flight".

Seriously, what is the point of telling that to a user? Something like "Look: your computer is crappy and some parts of it tend not to work so, the next time Windows blows up, it's the manufacturer's fault, not Microsoft's"?

Does anyone want to volunteer an alternative explanation?

A picture for users of lesser OSs

All in all, GNU/Linux systems are pretty mundane operating systems. There is nothing too fancy about them. Each is more or less a collection of good operating-system ideas (Andrew Tanenbaum will never read this, fortunately), rolled out as a kernel (Linux itself) with a very polished userland (GNU, plus other programs that particular distros select) on top of it.

Its roots date back to the 70s, to Unix - it was made to its image. Current versions of both are quite similar and a Linux user will be pretty much at home on OSX, BSD, Solaris or AIX.

But those 70's ideas do not mean Linux is an old-fashioned OS that brought nothing new to the world of operating systems.

One of the nicest things GNU/Linux introduced is comprehensive software package dependency and update management. With it, if you want to install a program, you can pick it from a list and, as if by magic, the program and all the libraries, resources and everything else it depends on are installed. No need to browse the web for an installer, no need to run programs as a super-user, nothing. Everything is quick and simple. And then, when a new version of something on your computer becomes available, the machine warns you and prompts you to install it. It does this regardless of where the software came from, as long as its publisher is registered with the software management system. That includes the Chrome browser and the VirtualBox VM tools in the picture you see, as well as Skype, which you don't see in the picture because mine is up-to-date. Software components are neatly divided into packages that depend on each other. Need a DVD burner? Codecs will be downloaded with it.

And then, when something becomes unnecessary or obsolete, the machine offers to delete it and conserve disk space.
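Under the hood, both tricks come down to walking a dependency graph. The snippet below is a toy sketch in Emacs Lisp, not how apt, yum or any real package manager is implemented. The package names and the dependency table are made up, and the sketch assumes the graph has no cycles. The hypothetical function my-install-order lists what must be installed, dependencies first. The hypothetical my-orphans finds installed packages that nothing you asked for needs anymore: the removal candidates.

```elisp
;; Toy model of package dependency resolution.  All package names and
;; the dependency table are hypothetical; real package managers also
;; handle versions, conflicts and cycles, which this sketch ignores.

(defvar my-demo-deps
  '((dvd-burner codecs gui-toolkit)   ; dvd-burner needs codecs and a GUI
    (codecs libc)
    (gui-toolkit libc)
    (libc))
  "Alist mapping each package to the list of packages it depends on.")

(defun my-install-order (pkg deps &optional scheduled)
  "Return SCHEDULED extended with PKG and its transitive dependencies.
Dependencies always come before the packages that need them.  DEPS is
an alist of (PACKAGE DEPENDENCY...).  Assumes DEPS has no cycles."
  (unless (memq pkg scheduled)
    ;; Schedule every dependency (recursively) before PKG itself.
    (dolist (dep (cdr (assq pkg deps)))
      (setq scheduled (my-install-order dep deps scheduled)))
    (setq scheduled (append scheduled (list pkg))))
  scheduled)

(defun my-orphans (installed wanted deps)
  "Return the members of INSTALLED that no package in WANTED needs."
  (let ((needed nil)
        (orphans nil))
    ;; Everything reachable from the packages the user asked for.
    (dolist (pkg wanted)
      (setq needed (my-install-order pkg deps needed)))
    ;; Whatever is installed but unreachable is a removal candidate.
    (dolist (pkg installed)
      (unless (memq pkg needed)
        (push pkg orphans)))
    (nreverse orphans)))
```

Evaluating (my-install-order 'dvd-burner my-demo-deps), for instance with M-:, returns (libc codecs gui-toolkit dvd-burner). The shared libc is scheduled only once, and each package lands after everything it needs.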

Other operating systems attempt to accomplish the same with a variety of tools, but none, perhaps with the exception of OpenSolaris (because Sun hired the founder of Debian), has anything that comes even close.

Cool, isn't it?

A bit of vaporware (or "Microsoft's Secret Newton Killer")

One of the funniest things about Microsoft is how predictable they are. Each and every time they perceive a threat to their cash cows (be it Windows, Office or completely new models of software distribution), they concoct an underwhelming and barely credible product. Either it is utterly fictitious, meant only to damage the sales of competitors who actually took the time to develop real products, or it is so infuriatingly flawed that it hampers the credibility of the whole model the competition is trying to steer the market towards.

I first observed it with Windows for Pen Computing, a response to the Newton, to the Momenta and to the GEOS-based Tandy and Casio über-PDAs. Then there was the Cairo/WinFS database/file system that was never delivered, a more generic confusion tool for the times some other vendor promised a better way to manage data. It spanned decades without so much as a working prototype.

I also remember the flurry of multi-touch things after Jeff Han's demo went viral, from Surface to a silly interaction on a precariously balanced notebook screen. There was a video of that one here, but Microsoft canned Soapbox as soon as they realized they could not compete with a Google-backed YouTube, and the video is toast.

More recently, we saw Project Natal overpromise a sci-fi-worthy way of interacting with games, complete with a video full of special effects. It overshadowed the more realistic and obviously less impressive offerings from Sony and Nintendo that were actually being launched. Did you see articles on the stuff being introduced at the same show? Me neither. It was all Project Natal.

Milo and Kate is quite impressive, but if Microsoft can do that, I don't know why they are wasting their time launching Windows versions - they could release a notebook version of HAL-9000. Or Skynet.

And now, under the buzz of a gigantic iPod Touch, an iNewton or whatever the Apple tablet may be called, Microsoft shows this: the "astonishing" (according to Gizmodo) Microsoft tablet, with software working so well you can't possibly trace its Windows heritage.

It's like Apple pretending the Knowledge Navigator was to be a real product about to launch instead of a fancy concept.

But, again, that's Microsoft and that's why we love them.

At least I do. They make me laugh.

And, just to finish it off, here is the classic Longhorn video from PDC 2003. If you don't want to be disappointed by Courier or Natal, consider how this video relates to the Windows Vista that actually shipped:

Monkey business

Ah... The stuff you find in your DNS logs...

Sep 5 07:21:03 heinlein named[1825]: client 200.171.10.117#1481: query (cache) 'www.itau.com.br.planetofapes/A/IN' denied

Would anyone with über-sysadmin superpowers care to explain what that means?

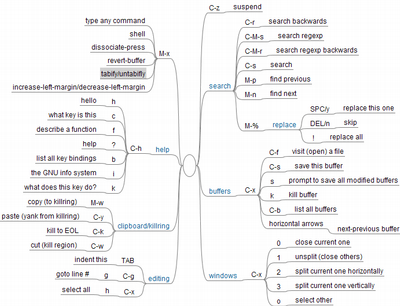

An Emacs cheatsheet as a mindmap

I have been using Emacs for some time now. It has a very steep learning curve, but its power and elegance make it my editor of choice for just about everything. So, inspired by this article, I decided to create my own Emacs cheatsheet. There are many Emacs cheatsheets, but all of them use a tabular format that is not, in my noob opinion, the best way to convey such information: you can interpret the Emacs commands as a tree-like keystroke structure and many important commands use two or more steps.

I started a mind-map of the keystroke trees with the commands I use the most (and some of the ones I find the most amusing). The plan is to make a navigable cheat sheet like the Mercurial and Git ones you can get here and here, plus some tips on what to add to your ~/.emacs.d/init.el file.
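The keystroke tree is more than a metaphor. Emacs stores key bindings in keymaps, and a prefix key such as Control X is simply a key bound to another keymap. Here is a minimal init.el sketch that grows a small subtree of its own. It assumes the prefix Control C t (Emacs conventions reserve Control C followed by a letter for users) and a hypothetical map named my-toggle-map.

```elisp
;; "C-c t" becomes an inner node of the keystroke tree; the key pressed
;; after it selects a leaf command.  The prefix and the map name are
;; arbitrary choices made for this example.
(defvar my-toggle-map (make-sparse-keymap)
  "Hypothetical prefix keymap for toggling display modes.")

;; Leaves of the subtree: one key per toggle command.
(define-key my-toggle-map (kbd "v") #'visual-line-mode)
(define-key my-toggle-map (kbd "w") #'whitespace-mode)
(define-key my-toggle-map (kbd "f") #'auto-fill-mode)

;; Hang the whole subtree off "C-c t" in the global keymap.
(define-key global-map (kbd "C-c t") my-toggle-map)
```

After this, Control C t v toggles visual-line-mode. The mind-map would then gain a "C-c t" branch with three leaves.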

You can get the very, very early version of the mind-map (in Freemind format) here or just look into the image that follows.

All the heavy magic is also missing, like the "smart paste" Marco Baringer does about 1:45 into the What is Ajax screencast that relates to David Crane's Ajax in Action book (I still don't know how it's done).

I would appreciate any advice from Emacs veterans and newbies alike, so, feel free to comment.

Editor nirvana

To say GNU Emacs is merely a text editor is an understatement. Ever since I decided I would learn to use it (out of a never quite accomplished mission of learning Lisp once and for all), it impresses me almost on a daily basis.

Yesterday, while playing with my choice of screen fonts for the editor (something every bit as important as choosing one's text editor), I discovered two pop-up menu options to increase and decrease the font size. A little playing with Meta-X and I arrived at a couple of functions, "text-scale-increase" and "text-scale-decrease". A little more digging brought me to the "Control X Control plus" and "Control X Control minus" key sequences. Usable, but I wanted something easier to type.

Few non-Emacs users appreciate the fact that Emacs has no configuration file. What it has instead is a program, written in its own Lisp dialect, that runs every time the editor starts. Within this program I can define new functions, load external libraries and even write a credible implementation of vi. This time I made two simple edits to my init.el file that added two new key bindings:

(global-set-key (kbd "C--") 'text-scale-decrease)
(global-set-key (kbd "C-+") 'text-scale-increase)

The first one binds the "Control minus" key combination to the text-scale-decrease function (which decreases the text size), while the second binds "Control plus" to its opposite, text-scale-increase. Easy enough for me. Now, every time Emacs starts, it has a couple of extra key bindings (on top of all the others already added by loading external libraries, modules and so on) that make my life more convenient.
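Here is one possible extension of those two bindings, sketched under a few assumptions. On many keyboard layouts, including US, typing "+" needs Shift, so "C-=" is added as a Shift-free alias. A hypothetical my-text-scale-reset command returns to the default size. Be aware that "C--" and "C-0" normally run negative-argument and digit-argument. Rebinding them shadows those commands, although M-- and M-0 still do the same job.

```elisp
;; Hypothetical helper, not part of the original setup: return the
;; current buffer's text to its default size (scale step 0).
(defun my-text-scale-reset ()
  "Reset the text scale of the current buffer to its default size."
  (interactive)
  (text-scale-set 0))

;; The two bindings from above.
(global-set-key (kbd "C--") 'text-scale-decrease)
(global-set-key (kbd "C-+") 'text-scale-increase)

;; Assumed extras: a Shift-free alias for increasing the size, and the
;; reset helper. These shadow the default digit/negative-argument keys.
(global-set-key (kbd "C-=") 'text-scale-increase)
(global-set-key (kbd "C-0") 'my-text-scale-reset)
```

In a text terminal, some of these control combinations may never reach Emacs, so bindings like these are most useful in graphical frames.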

And this concludes my Emacs praise of the day. Thanks for coming.

I really want to like Apple

I do. Seriously.

I loved my Apple IIs passionately. I love my Macintosh collection (from the SE to the Bondi-blue iMac). I am not a heavy Mac user: I keep a Mac on my desk as a second computer next to my main one, a netbook running Linux (because I like carrying it around, because it's cheap and because I like Linux better than OS X for work). Still, I like Apple's products and recommend them when I feel it's appropriate. For instance, when my then fiancée wanted a new notebook, I convinced her she would be happy with a MacBook, and so she is. She even married me after that.

But I don't think Apple loves me. Or, for that matter, any of its lovers.

One cable

Some time ago, I bought an iPod Touch. I was about to build an application for it, so I needed one. I quickly fell in love with it as a media player as well as an über-PDA with web access. At the time, there was no iPhone SDK and the project was canned, but by then I already liked the iPod quite a lot. The cancellation was also fine by me because, while Objective-C is a much better idea than C++, being better than C++ doesn't say that much. Still, I kept the iPod, and soon listening to audio podcasts became part of my morning routine, just as watching the video ones became a lifesaver whenever our weekly air-traffic-control meltdown left me stranded at some small airport with no wireless access.

So, it was only natural for me to buy a cable to hook it up to my TV.

Despite the size of Brazil's consumer market, there are no Apple Stores here. Many people attribute this, along with what appears to be active sabotage by Apple, to its deep hatred for the only country that had a company that dared to produce a Mac-compatible computer (that's one ugly story). Fortunately, some companies decided to cater to the unrequited love Apple turned its corporate back on and build the stores themselves. So, I went to the next best thing: the local "a2you" chain of stores, where I got myself a composite cable.

It worked beautifully.

I mean... The user interface is really horrid for playing videos and watching them from the couch. Unless your videos happen to be long enough, you will have to play each one from the iPod itself. There appears to be no video playlist thingie anywhere on the iPod. Audio went just fine, but not video.

Then I got involved in another project involving podcasts.

So, the time came for me to update the iPod's software. It is a first-gen iPod Touch that came with the 1.0 software (updated to 1.1.5, if I remember correctly), so I created an account on the iTunes Store and downloaded the software. OS 3 is a nice improvement over 2.x and a huge improvement over the 1.x I was running. It's not as snappy as 1.x (it really seems built for the "S" iPhones), but it's bearable.

But it had one unwelcome side effect: my composite cable no longer works.

DRM (as in Digital Restrictions Management)

I mean, it does, then it doesn't.

It's not a cable problem. It worked flawlessly with 1.x. It still works on 1.x units.

The problem seems to be the software. It apparently checks whether the cable is made by Apple and then, in the middle of playback (just demonstrating that the cable works perfectly), freezes the video and spits out a "This accessory is not compatible with this iPod" message or something like it. Well... It's a cable! How incompatible with something can a cable be? Is it a DRM issue? Is it built in so that future HD iPods can render useless any cable that does not provide copy protection? It's an analog cable! The lowest-quality one! Who would consider using it for piracy? And to pirate what? TED lectures? Episodes of Cranky Geeks? Conference presentations? It's much easier just to rip the DRM off the original file (and there are many automated tools for that).

This and the recent Amazon Kindle problem - Jeff Bezos can apologize as much as he wants, the ability to remove content you have already purchased is still there and can be abused any time Amazon feels like breaking promises - made me really wary of DRM. Even with single-purpose devices, a software update can break the hardware I already own, even something as simple as a cable. It's not my device if Apple can do things like this to it. I may possess it, but, in reality, it belongs to, and obeys, Apple. If Apple decides to brick it, bricked it will be.

Then there is also the shady process Apple uses to approve applications. It's not in the best interest of Apple's customers for Apple to have a stranglehold on applications for the platform. I understand they want quality control, but customers may want to circumvent that control for their own uses, or because they have different ideas about quality.

Defective by Industrial Design

You know... The Apple tablet the Financial Times seems to have confirmed today looks sweet. I would love to play with it. I would even consider buying one, but I won't. The point is, as much as I like Apple's attention to detail and its outstanding industrial design, I can't justify buying a product that's not really mine. Call me spoiled, but using stuff like Linux made me feel I am really in control. The netbook is mine, and nobody will make my computer do something I don't approve of. If it ceases to work, it will be my fault.

So, if Apple would please unbreak its software ecosystem so that it doesn't actively try to screw its customers, I may consider buying a tablet (or an iPhone, or even another iPod touch for the day this one dies).

But recovering the trust I had in their attention to their customers will take some time.

An Interesting Update

I must confess I did not search the web (or Apple's forums) before either upgrading or buying the cable (that part was not my fault, as the cable worked at the time it was purchased and stayed that way until a couple of days ago), but, because someone reminded me I could do that, I googled it and found this:

http://discussions.apple.com/thread.jspa?threadID=2046835

So, at least now I know I am not alone with my cable problems. And, finally, I am convinced it's not a cable problem and not a connector problem, but a vendor selection problem.

For a while, a lively discussion about it (and, perhaps, about my scientific method) also took place here:

http://news.ycombinator.com/item?id=726922

Hacker News (the site at ycombinator.com) is considered a troll-free zone and I wish it to remain so. Please, if you want to participate there, mind your manners and read and follow the guidelines.

Subtleties

A couple of days ago, while watching a re-run of Gattaca, I noticed something that escaped me the last couple of times I watched the movie (yes - I watch good movies more than once).

In the gym scene, detective Anton says to director Josef "I am curious". Then we are given a brief glimpse into the director's soul when he assumes this is not about the ongoing murder investigation but rather a comment on Anton's own nature, and praises it as a very useful trait in his profession.

Writing a screenplay like this is building people word by word. While part of me was happy to have found this small pearl, another part of me felt truly saddened for the director.

I just thought it was worth sharing.

The unbearable kludginess of Windows

That's another one for the "a picture is worth a thousand words" series. When I read Zombie Operating Systems and ASP.NET MVC, I couldn't believe it could be that bad. Then I started Windows 7 in a VM and checked it out.

This is what I got:

Parodies

I seldom do this - just embedding a video - without an article around it, but the subtitles say everything I could ever hope to say.

You can find the original here.

Previous:

Five reasons why this developer won't switch to Mac
